Communicating science: Sending the right message to the right audience

Abstract

Introduction

Materials and methods

Statistical analyses

Results

Trust versus use

| Predictor | Trust: Social media | Trust: Academic journals | Trust: Personal experience | Use: Social media | Use: Academic journals | Use: Personal experience |
|---|---|---|---|---|---|---|
| Model fit | R² = 0.14, s = 4, wt = 0.81 | R² = 0.19, s = 4, wt = 0.81 | R² = 0.04, s = 2, wt = 0.99 | R² = 0.20, s = 3, wt = 0.91 | R² = 0.35, s = 2, wt = 0.99 | R² = 0.16, s = 3, wt = 0.84 |
| Intercept | −0.14 (−0.74 to 0.47) | −0.43 (−1.17 to 0.31) | −0.031 (−0.31 to 0.24) | 0.090 (−0.48 to 0.66) | 0.43 (−0.14 to 1.00) | 0.041 (−0.47 to 0.56) |
| Age 21–25 | 0.33 (−0.18 to 0.85) | 0.29 (−0.17 to 0.73) | 0.17 (−0.31 to 0.66) | 0.26 (−0.25 to 0.77) | | |
| Age 26–30 | 0.49 (0 to 0.98) | 0.27 (−0.16 to 0.70) | 0.35 (−0.12 to 0.82) | 0.14 (−0.35 to 0.63) | | |
| Age 31–35 | 0.38 (−0.13 to 0.88) | 0.098 (−0.34 to 0.53) | −0.014 (−0.51 to 0.48) | 0.32 (−0.18 to 0.82) | | |
| Age 36–40 | 0.010 (−0.42 to 0.62) | 0.15 (−0.30 to 0.60) | −0.10 (−0.62 to 0.41) | 0.29 (−0.23 to 0.80) | | |
| Age 41–45 | 0.025 (−0.52 to 0.58) | 0.17 (−0.31 to 0.65) | −0.28 (−0.83 to 0.27) | −0.23 (−0.77 to 0.32) | | |
| Age 46–50 | 0.24 (−0.34 to 0.82) | −0.010 (−0.52 to 0.50) | 0.067 (−0.50 to 0.64) | −0.013 (−0.59 to 0.57) | | |
| Age 51–55 | −0.16 (−0.75 to 0.43) | −0.48 (−0.97 to 0) | −0.58 (−1.17 to −0.02) | −0.34 (−0.90 to 0.22) | | |
| Age 56–60 | −0.54 (−1.26 to 0.18) | 0.015 (−0.58 to 0.61) | −0.81 (−1.51 to −0.11) | −0.42 (−1.12 to 0.27) | | |
| Age 61–65 | −0.43 (−1.08 to 0.22) | −0.25 (−0.79 to 0.30) | −1.01 (−1.65 to −0.37) | −0.56 (−1.19 to 0.08) | | |
| Age 66+ | −0.16 (−0.76 to 0.43) | −0.35 (−0.86 to 0.17) | −0.87 (−1.45 to −0.29) | 0.22 (−0.36 to 0.80) | | |
| Resource manager | −0.29 (−0.84 to 0.27) | −0.380 (−0.82 to 0.06) | −0.37 (−0.89 to 0.15) | −0.80 (−1.18 to −0.42) | | |
| Non-academic scientist | −0.43 (−0.82 to −0.03) | −0.017 (−0.35 to 0.31) | −0.053 (−0.44 to 0.33) | −0.45 (−0.74 to −0.16) | | |
| General public | −0.42 (−0.77 to −0.06) | −0.37 (−0.73 to −0.02) | −0.35 (−0.70 to −0.01) | −1.25 (−1.56 to −0.94) | | |
| Policymaker | 0.60 (−0.30 to 1.50) | 0.19 (−0.63 to 1.01) | 0.76 (−0.17 to 1.69) | −0.88 (−1.59 to −0.17) | | |
| Student | −0.080 (−0.43 to 0.27) | −0.024 (−0.33 to 0.28) | −0.22 (−0.60 to 0.16) | −0.56 (−0.82 to −0.29) | | |
| General trust | 0.54 (−0.30 to 1.39) | 0.67 (−0.01 to 1.34) | 0.19 (−0.57 to 0.95) | 0.41 (−0.35 to 1.17) | 0.37 (−0.22 to 0.95) | 0.27 (−0.49 to 1.03) |

Note: Significant predictors (where confidence intervals do not overlap zero) are depicted in bold. R² describes the goodness of fit of the global model for each response variable, whereas s and wt represent the number of models incorporated in the final averaged model and their cumulative AIC weight, respectively. Only values from predictors incorporated into the final averaged model for each response variable are included.
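The two quantities the note relies on, the cumulative AIC weight (wt) of the s averaged models and the check that a confidence interval does not overlap zero, can be illustrated with a minimal Python sketch. The AIC scores below are hypothetical placeholders, not values from the study, and the function names are illustrative. The sketch uses the standard Akaike-weight formula and does not attempt to reproduce the authors' model-selection procedure, which is not described in this section.

```python
import math


def akaike_weights(aic_values):
    """Convert AIC scores into Akaike weights that sum to 1."""
    best = min(aic_values)
    # Relative likelihood of each model given its AIC difference from the best model.
    rel = [math.exp(-0.5 * (aic - best)) for aic in aic_values]
    total = sum(rel)
    return [r / total for r in rel]


def cumulative_weight(weights, s):
    """Summed weight of the s best-supported models (the 'wt' of the note)."""
    return sum(sorted(weights, reverse=True)[:s])


def excludes_zero(lower, upper):
    """True when a confidence interval lies entirely on one side of zero."""
    return lower > 0 or upper < 0


# Hypothetical AIC scores for four candidate models (assumed, not from the paper).
aic_scores = [100.0, 101.2, 102.5, 106.0]
weights = akaike_weights(aic_scores)
wt_top3 = cumulative_weight(weights, s=3)

# Two intervals as printed in the table:
# General public row, −0.42 (−0.77 to −0.06); Age 26–30 row, 0.49 (0 to 0.98).
general_public_significant = excludes_zero(-0.77, -0.06)
age_26_30_significant = excludes_zero(0.0, 0.98)

print(f"cumulative weight of top 3 models: {wt_top3:.2f}")
print(general_public_significant, age_26_30_significant)
```

With the rounded bounds as printed, an interval such as 0 to 0.98 touches zero and is therefore not counted as excluding it in this sketch. The unrounded bounds in the original analysis may lead to a different call.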

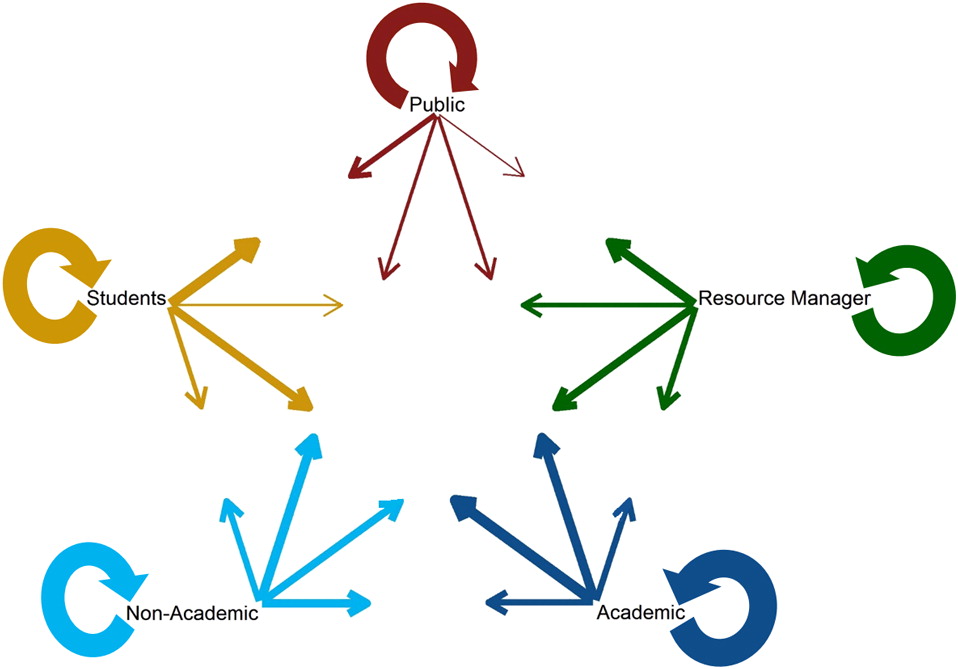

Communication within and among groups

Discussion

Trust versus use

Communication among groups

Conclusions

Acknowledgements

References

Supplementary material

